Automate content moderation for brand safety

Reduce manual review with AI content screening to protect your community, sponsors, and reputation.

Adhere to your existing content policies and save moderator time

Understand changes in objectionable content to remove it faster

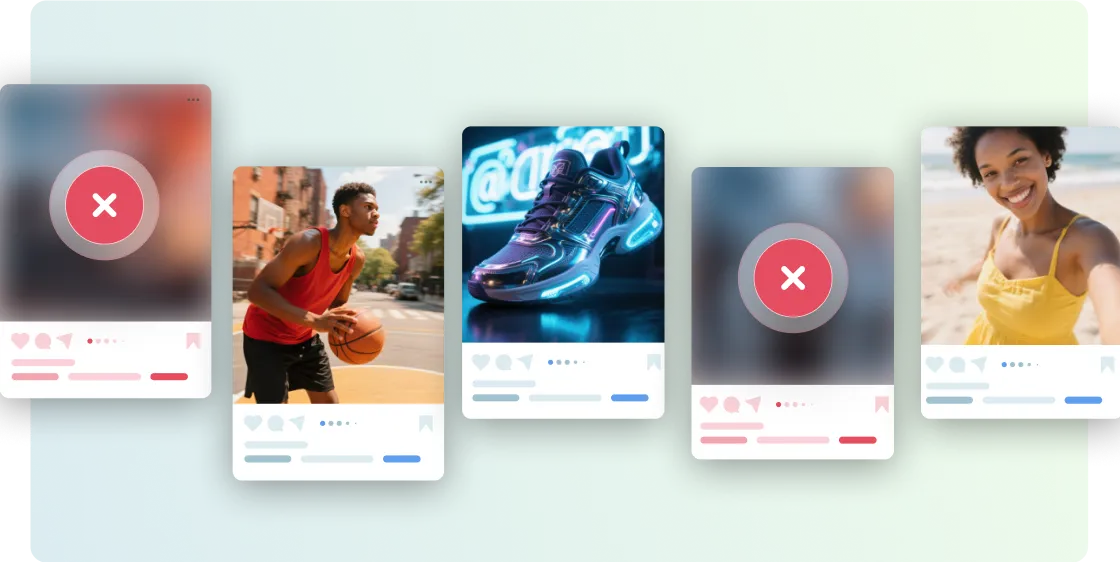

Automate UGC evaluation

Process user-generated images, video, and audio in real time with a customizable, multimodal AI engine that approves appropriate content and weeds out objectionable posts.

Fandom is the world’s largest online fan platform. It trusts Coactive AI to moderate 2.2 million posts each month, with 90% of uploads handled without human review.

Automatically remove content that violates your terms of service

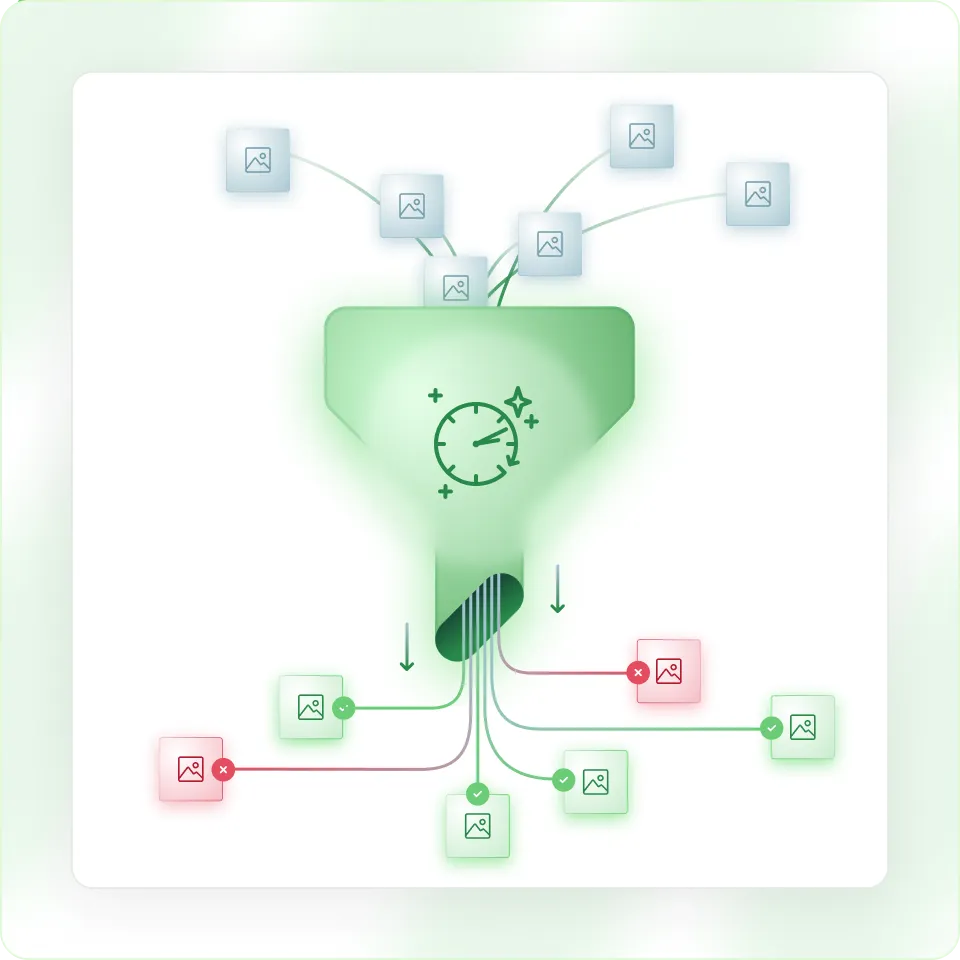

Moderate the bulk of user-generated content uploads automatically, so that human moderators can concentrate on the edge cases routed for review.

This boosts efficiency and accuracy, reduces overall content moderation cost, and improves team wellbeing.

With Coactive, Fandom reduced manual moderation time by 74% and improved moderation team morale by limiting exposure to disturbing content.

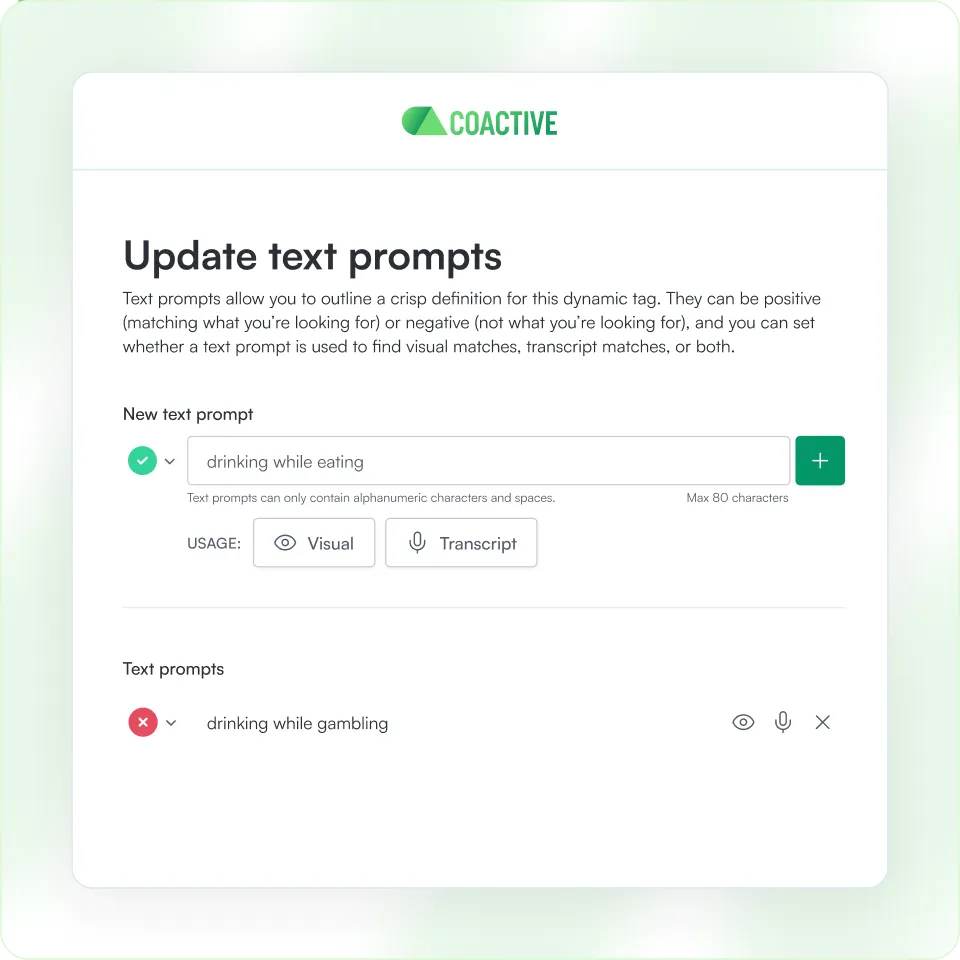

Keep up with evolving definitions of unacceptable content

Policies change quickly. Fine-tune your text and visual results as the definition of harmful content changes, and get objectionable content removed faster.

This keeps users safe and avoids reputational damage, advertiser backlash, and regulatory scrutiny.

Coactive helped Fandom reduce content takedown handle time from hours to seconds.

Content Moderation

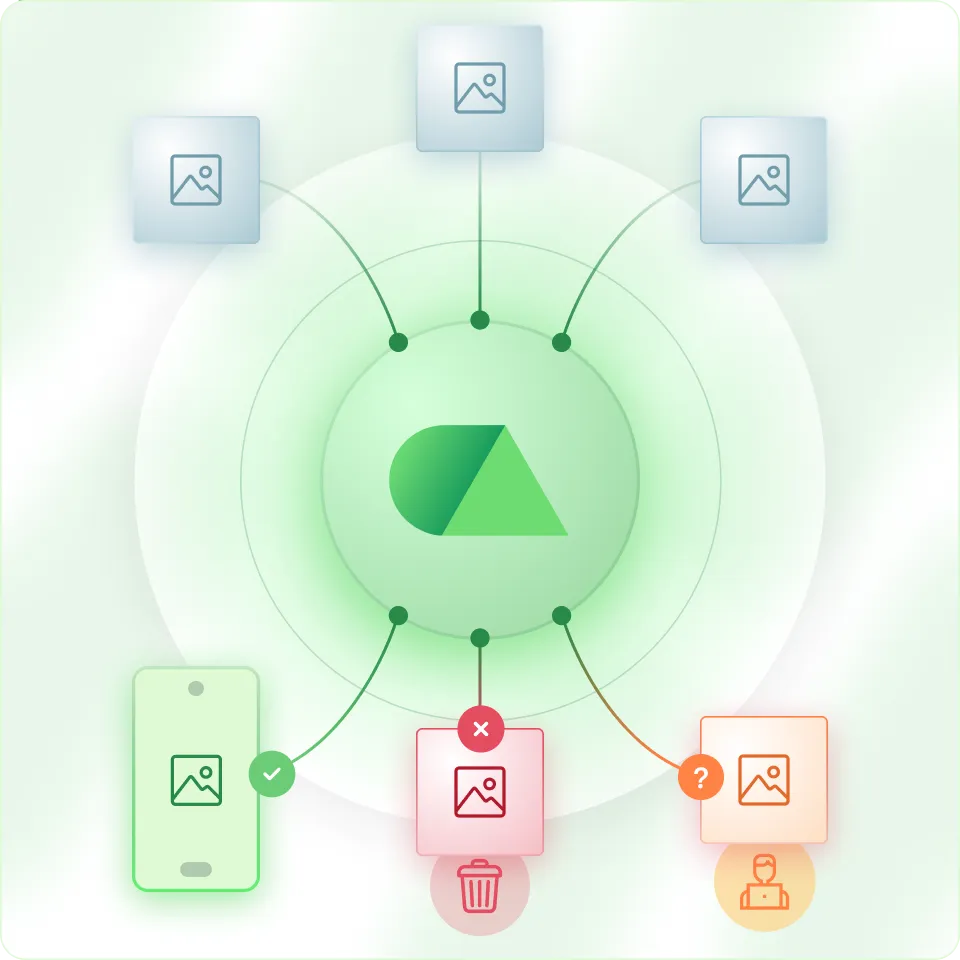

Multimodal AI for brand safety

Standardize automated content tagging based on platform-specific policies without the manual work.

Define scoring thresholds to auto-approve safe content, auto-reject harmful content, and route edge cases for human review.

Process images in seconds, allowing moderation to happen at or near upload time.

Improve performance over time using content moderators’ decisions on edge cases, which feed directly back into the AI model.

Understand which content violates terms of service and keep it off the platform automatically.
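
As an illustration of the scoring thresholds described above, here is a minimal Python sketch of routing by score. It assumes the model returns a single harm score between 0 and 1 for each upload. The names `Thresholds`, `Decision`, and `route`, and the cutoff values 0.20 and 0.90, are hypothetical and chosen only for this example; they are not Coactive's API or recommended settings.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    APPROVE = "approve"
    REJECT = "reject"
    HUMAN_REVIEW = "human_review"


@dataclass(frozen=True)
class Thresholds:
    """Hypothetical cutoffs on a harm score in the range [0, 1]."""
    approve_below: float = 0.20  # at or below this score: auto-approve
    reject_above: float = 0.90   # at or above this score: auto-reject

    def __post_init__(self) -> None:
        # The approve cutoff must sit strictly below the reject cutoff,
        # otherwise there is no band left for human review.
        if not 0.0 <= self.approve_below < self.reject_above <= 1.0:
            raise ValueError("expected 0 <= approve_below < reject_above <= 1")


def route(score: float, thresholds: Thresholds = Thresholds()) -> Decision:
    """Map a harm score to approve, reject, or human review."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score <= thresholds.approve_below:
        return Decision.APPROVE
    if score >= thresholds.reject_above:
        return Decision.REJECT
    # Anything between the two cutoffs is an edge case for a moderator.
    return Decision.HUMAN_REVIEW
```

Narrowing the gap between the two cutoffs sends fewer uploads to moderators at the cost of more automated decisions on borderline content; widening it does the opposite.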