Monetize media content with multimodal AI

Unlock the next generation of ad targeting, content discovery, and brand safety.

Ensure brand trust and safety at scale to protect users, advertisers, and your brand.

Search your library using natural language, concept similarity, or specific content cues, across formats and legacy systems.

Drive content analytics, categorization, and monetization workflows for data-driven insights and smarter decisions.

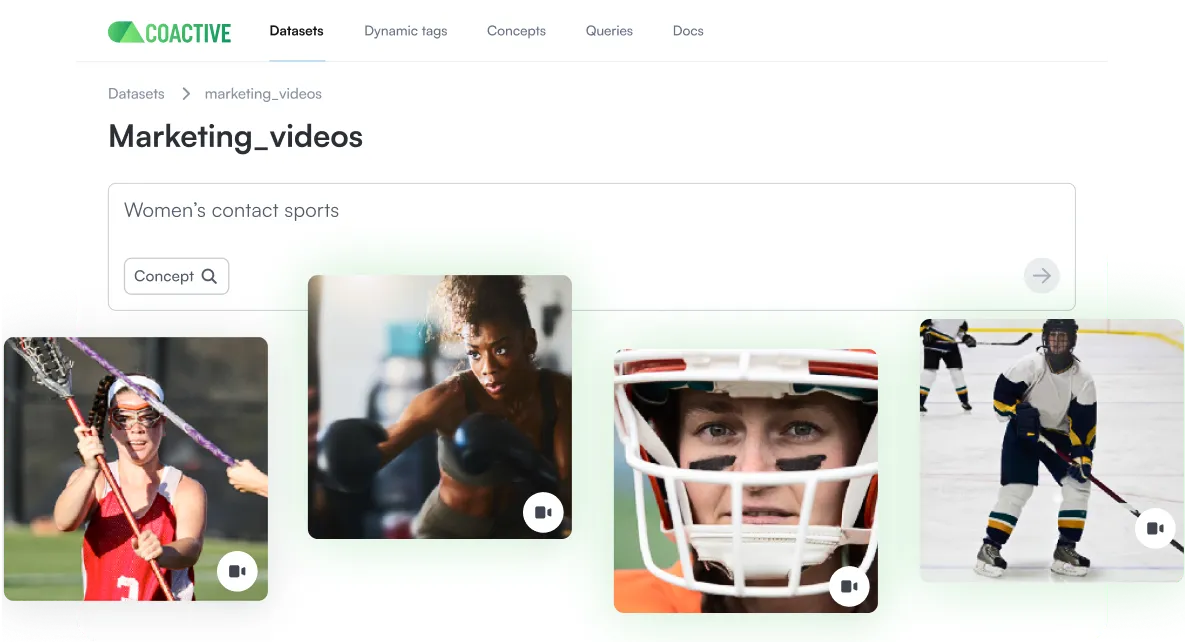

Deliver targeted content and ads

Understand your content to pick the right moment

Use a rich, contextual understanding of your content to deliver highly personalized content recommendations and targeted ads. Personalize by emotion, action, or abstract concepts like "uplifting moments."

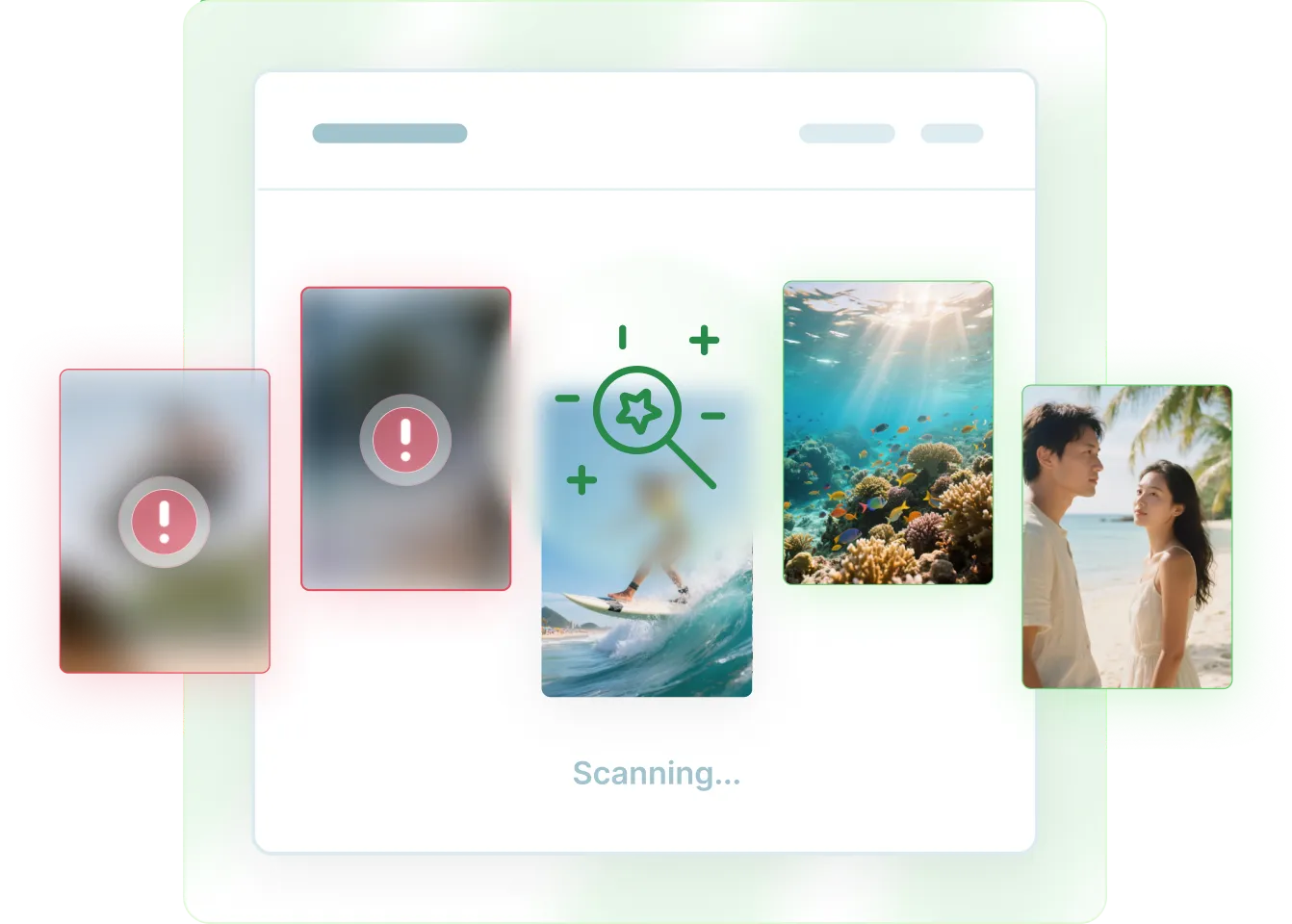

Ensure brand trust and safety

Approve, deny, and flag content in milliseconds

Coactive's multimodal AI engine enables enterprise-grade moderation of user-generated content (UGC). It fine-tunes AI for customized content categories, defines nuanced taxonomies that reflect platform-specific policies, scores content against thresholds, and routes edge cases for human review.
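The score, threshold, and route flow described above can be illustrated with a minimal sketch. This is not Coactive's API: the `route` function, the `Decision` type, and both threshold values are hypothetical, and the violation score is assumed to come from some upstream classifier.

```python
from dataclasses import dataclass

# Hypothetical thresholds; real values would be set by platform policy.
APPROVE_BELOW = 0.20
DENY_ABOVE = 0.90


@dataclass
class Decision:
    asset_id: str
    action: str  # "approve", "deny", or "review"
    score: float


def route(asset_id: str, violation_score: float) -> Decision:
    """Map a violation score in [0, 1] to a moderation action."""
    if not 0.0 <= violation_score <= 1.0:
        raise ValueError("violation_score must be in [0, 1]")
    if violation_score < APPROVE_BELOW:
        action = "approve"   # confidently safe
    elif violation_score > DENY_ABOVE:
        action = "deny"      # confidently violating
    else:
        action = "review"    # edge case: send to a human reviewer
    return Decision(asset_id, action, violation_score)
```

Widening the gap between the two thresholds sends more content to human review; narrowing it automates more decisions at the cost of more errors on borderline content.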

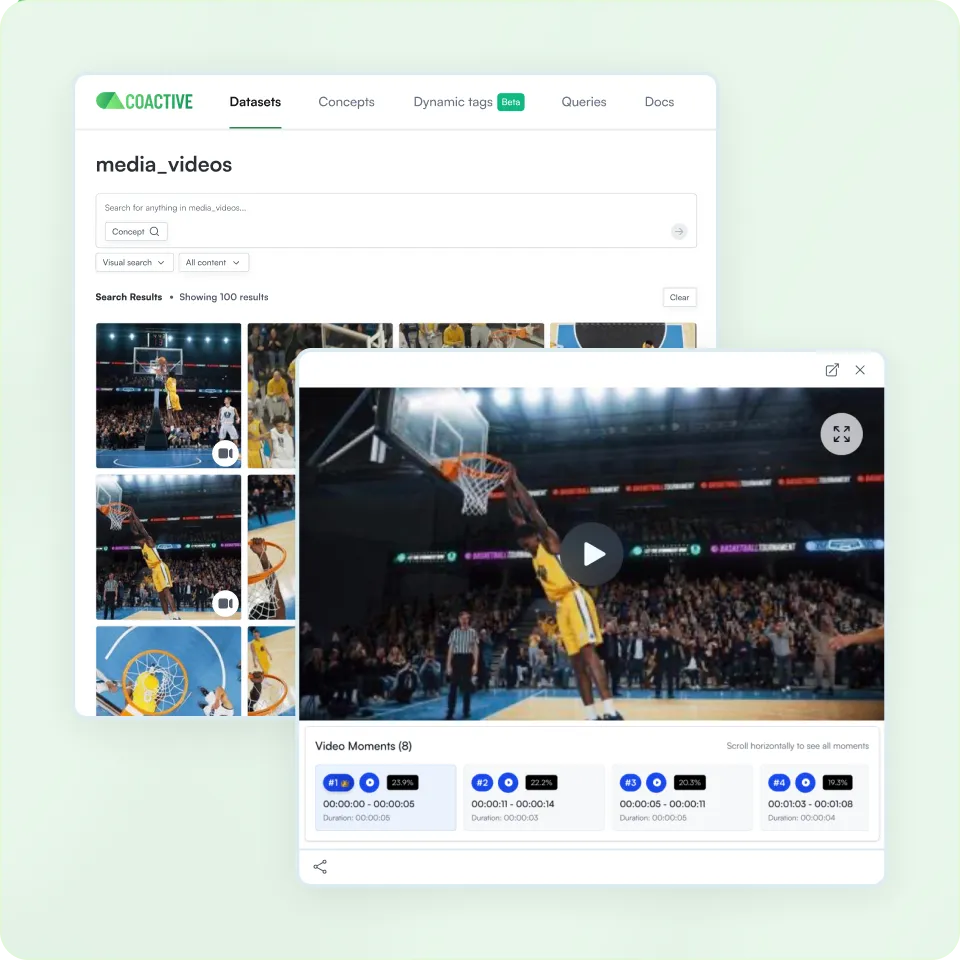

Enhance search and user experience

Harness natural language and visual prompts

Empower your applications with semantic search capabilities, derived from multimodal embeddings across images, audio, and video. Coactive search finds the perfect moment in an instant, without the need for pre-existing metadata or keywords.
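As a rough illustration of how embedding-based search can work without metadata or keywords, the sketch below ranks stored moments by cosine similarity to a query vector. It is a generic example, not Coactive's implementation: the `search` function, the moment keys, and the three-dimensional vectors are all made up, and real embeddings would come from a multimodal model and have far more dimensions.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def search(query_vec, index, top_k=3):
    """Return the top_k (key, score) pairs most similar to the query."""
    scored = [(cosine(query_vec, vec), key) for key, vec in index.items()]
    scored.sort(reverse=True)
    return [(key, score) for score, key in scored[:top_k]]


# Hypothetical index: one embedding per video moment.
index = {
    "clip_01@00:12": [0.9, 0.1, 0.0],
    "clip_02@01:47": [0.1, 0.8, 0.3],
    "clip_03@00:05": [0.7, 0.2, 0.1],
}

# Hypothetical embedding of a text query in the same vector space.
query = [1.0, 0.0, 0.0]
results = search(query, index, top_k=2)
```

Because the query and the content share one vector space, the same index can serve text prompts and visual prompts alike.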

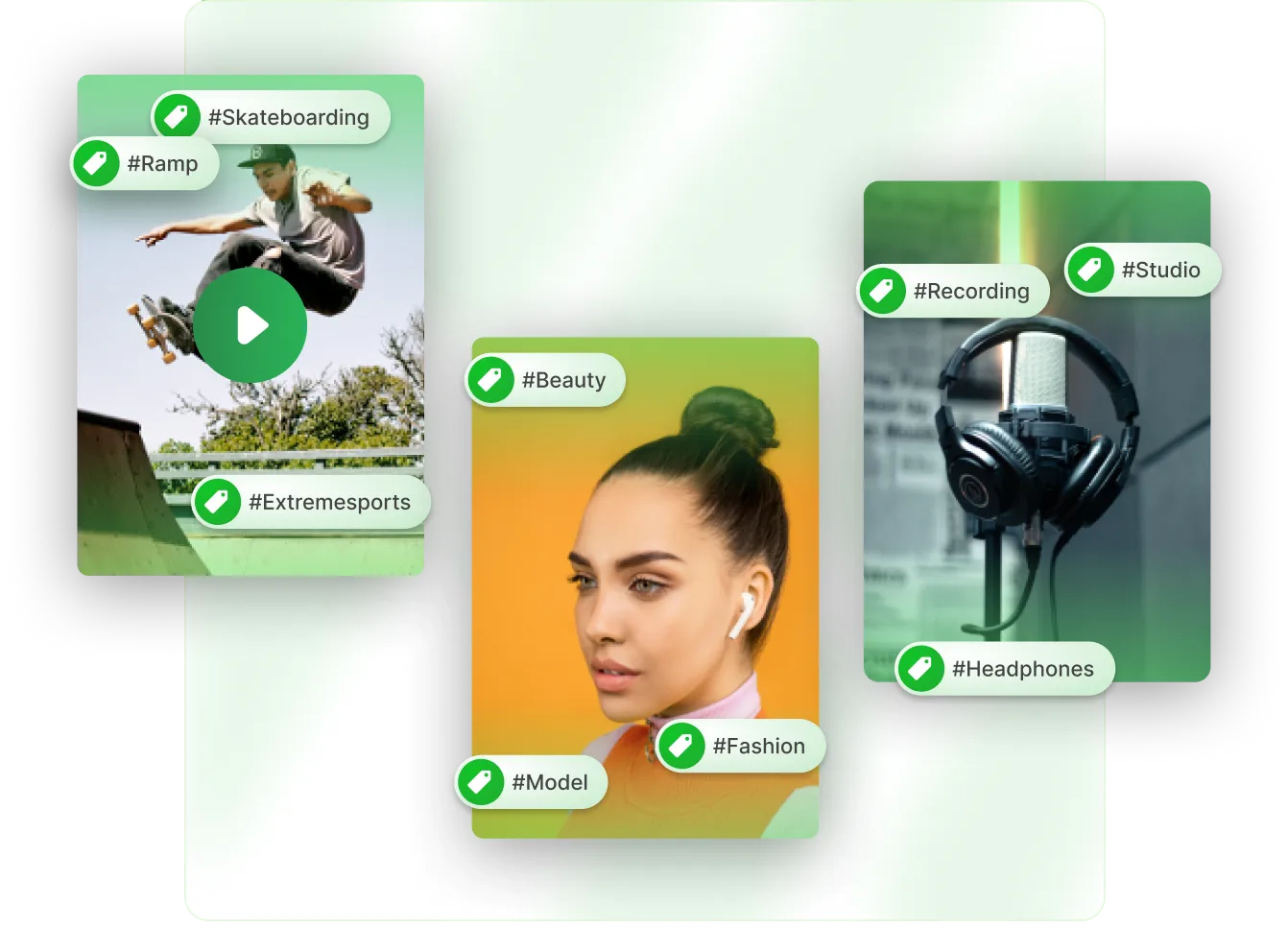

Optimize content performance

Drive content analytics, categorization, compliance, and monetization workflows

Bring rich content signals into your analytics workflows to gain data-driven insights into content performance and make smarter decisions. Generate intent-based, structured metadata (such as themes, activities, scene types, detected objects, or summaries) by applying standard industry taxonomies, such as those from the Interactive Advertising Bureau (IAB) and the International Press Telecommunications Council (IPTC).

Get more value from video and images at scale with Coactive

Extract rich, contextual signals from your content, including video, image, and audio.

Automatically classify and easily fine-tune new and niche content types, with no pre-training required.

Coactive easily handles large content volumes. Process petabytes of visual content quickly and cost-efficiently.

Launch new experiences and applications faster by processing visual content once and reusing generated embeddings repeatedly.

Power agentic and AI-native applications with temporal relationships across shot, scene, video, and library.

Why Media and Entertainment Leaders Trust Coactive

Bring your own model and leverage best-of-breed foundation models

Fine-tuning is portable and transferable across models

Embeddings are reusable across tasks

Horizontally scales to process millions of hours of content

Real-time metadata generation at industrial scale

Proven throughput: up to 2,000 hours of video processed per hour*

Intelligent keyframe sampling cuts compute costs by 6–10x

Cached outputs eliminate redundant processing

One processing pass powers search, summarization, metadata, and analytics

Fine-tuning layer decoupled from base models

Rapid iteration without re-running foundation models

Create custom attributes, concepts, and object-level classifiers

*Based on an actual customer experience. Ingestion performance is dependent on specific conditions, and your experience may vary.

Fandom reduces moderation time by 74% for tens of millions of assets

"With Coactive, Fandom automated content moderation and visual search across millions of assets saving time and improving accuracy."